|

Bharat Runwal

I graduated from IIT Delhi with a B.Tech in Electrical Engineering (Power and Automation). At IITD, I was a member of the MISN group, where I completed my B.Tech thesis "Robustifying GNN against Poisoning Adversarial Attacks using Weighted Laplacian" under the guidance of Prof. Sandeep Kumar.

My research interests span Computer Vision, Multimodal Learning, Compositionality, Efficient ML and Continual Learning. However, I'm always open to exploring new research directions and developing interesting real-world projects.

Email /

Google Scholar /

Twitter /

Linkedin /

Github

|

|

News

- October 2025: Check out our family of Granite 4.0 Hybrid Models on Hugging Face.

- December 2024: Our work From PEFT to DEFT: Parameter Efficient Finetuning for Reducing Activation Density in Transformers is accepted at the AAAI 2025 main conference.

- September 2024: Our new library Continual-Diffusers: Continual learning with Diffusion models is out now!

- September 2024: Our work on SOUL: Unlocking the Power of Second-Order Optimization for LLM Unlearning is accepted at the EMNLP Main Conference, 2024.

- April 2024: Our work on Uncovering the Hidden Cost of Model Compression is accepted at the Prompting in Vision workshop, CVPR, 2024.

- September 2023: Joined OPTML Group @ MSU as a visiting research scholar

- March 2023: Our work on Pruning CodeBERT for Improved Code-to-Text Efficiency is accepted to the Sparsity in Neural Networks (SNN) workshop, ICLR 2023.

- November 2022: Our work on APP: Anytime Progressive Pruning is accepted to the Continual Lifelong Learning (CLL) workshop at ACML, 2022 and SlowDNN workshop, 2023.

- October 2022: I am starting as a Research intern at Mila from November.

- August 2022: Our work on "Received Signal Modeling and BER Analysis for Molecular SISO Communications" is accepted to ACM NanoCom 2022!

- July 2022: I received the Best Student Paper Award for my work on "Robustifying GNN Via Weighted Laplacian" at SPCOM 2022!

- July 2022: Our work on APP: Anytime Progressive Pruning is accepted to the Sparsity in Neural Networks (SNN) workshop, 2022.

- June 2022: My B.Tech Thesis work on "Robustifying GNN Via Weighted Laplacian" is accepted at SPCOM 2022!

- June 2022: Our work on APP: Anytime Progressive Pruning is accepted to the Dynamic Neural Networks (DyNN) workshop at ICML, 2022.

- January 2022: I have been selected to be a part of the Research Week with Google, organised by Google Research, India.

- January 2022: I will be serving as a teaching assistant for ELL888: Advanced Machine Learning (Foundations in High-Dimensional and Graph ML) at IIT Delhi, taught by Prof. Sandeep Kumar and Prof. Jaydeva, for the Spring 2022 semester.

- December 2021: I will be attending NeurIPS 2021, happy to chat with you there!

- October 2021: I will be attending ICCV 2021, happy to chat with you there!

Research Experience

|

Research Intern, Jan 2023 - September 2023

Collaborators: Yilun Du, Prof. Josh Tenenbaum

Research Topic: Continual Generative Modeling

|

|

Visiting Scholar, Jan 2022 - March 2023

CERC-AAI Lab, Mila - Quebec AI Institute

Collaborators: Diganta Misra, Irina Rish

Research Topic: Sparsity, Continual Learning

|

|

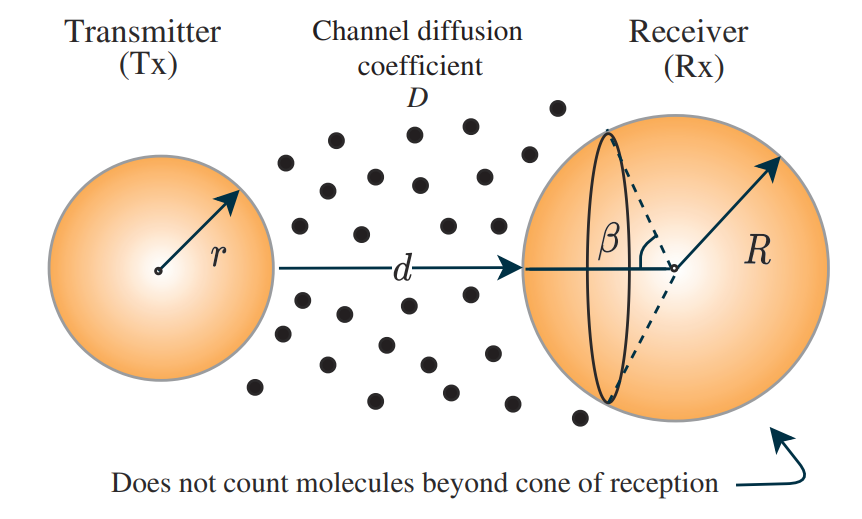

Research Intern, June 2021 - Oct. 2021

Internet Of Everything (IoE) Group, University Of Cambridge

Collaborators: Arunava Das, Dr Oktay Cetinkaya, Prof. Özgür B. Akan

Research Topic: Received Signal Modeling and BER Analysis for Molecular SISO Communications

Accepted to the ACM NanoCom 2022.

|

|

Research Intern, Oct. 2020 - May 2021

Deep Data Lab, HPI Potsdam, Germany

Supervisor: Prof. Gerard de Melo

Research Area: NLP

|

Work Experience

|

Research Engineer, March 2025 - Current

IBM Research

Manager: Rameswar Panda (MIT-IBM Watson AI Lab)

Worked on large-scale training optimizations and mid-training as part of the core team behind the Granite 4.0 family of models.

Developing RL environments for cybersecurity agents to enhance adaptive reasoning in automated pentesting and threat hunting.

|

|

Research Engineer, March 2024 - August 2024

Simbian

Led the development of the Security Accelerator, improving threat hunting and detection in the cybersecurity domain.

|

|

AI Research Intern, June 2021 - August 2021

AlphaICs

Research Area: Quantization of Neural Networks and Graph Neural Networks (GNNs)

|

|

Junior Machine Learning Engineer, June 2021 - August 2021

Omdena

Project: Helping People with Visual Impairment to Easily Use Buses through Computer Vision

|

|

NLP Intern, May 2021 - June 2021

Zevi

Worked on building a vernacular search engine for e-commerce applications, with features such as price tag detection from the query, autocomplete, and spell check.

|

|

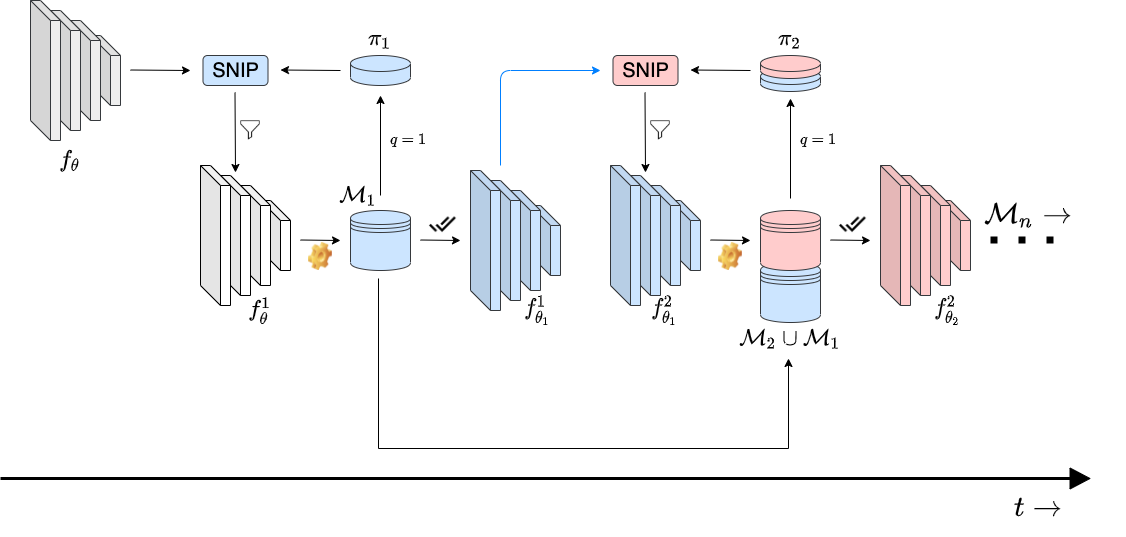

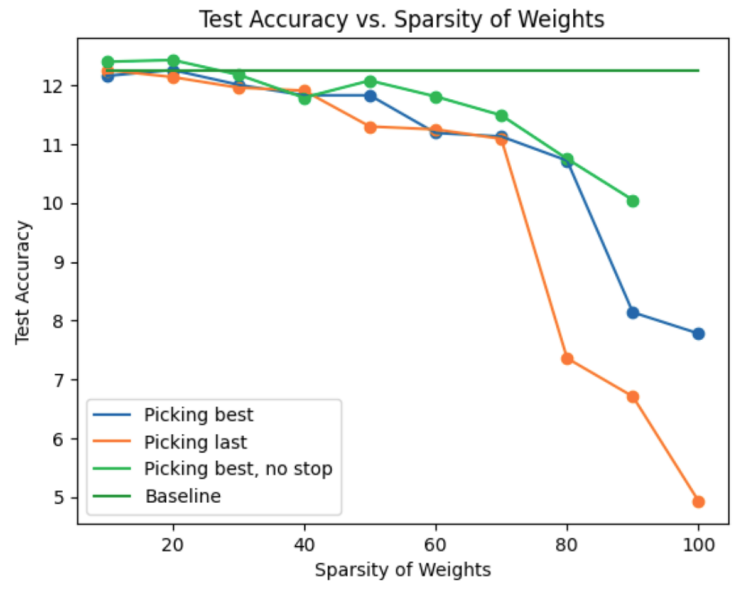

APP: Anytime Progressive Pruning

Diganta Misra*,

Bharat Runwal*,

Tianlong Chen,

Zhangyang Wang,

Irina Rish

DyNN workshop at ICML, 2022

SNN, 2022

CLL workshop at ACML, 2022

SlowDNN workshop, 2023

project /

paper /

webpage /

abstract /

bibtex

With the latest advances in deep learning, there has been a lot of focus on the online learning paradigm due to its relevance in practical settings. Although many methods have been investigated for optimal learning settings in scenarios where the data stream is continuous over time, sparse network training in such settings has often been overlooked. In this paper, we explore the problem of training a neural network with a target sparsity in a particular case of online learning: the anytime learning at macroscale paradigm (ALMA). We propose a novel way of progressive pruning, referred to as Anytime Progressive Pruning (APP); the proposed approach significantly outperforms the baseline dense and Anytime OSP models across multiple architectures and datasets under short, moderate, and long-sequence training. Our method, for example, shows an improvement in accuracy of approximately 7% and a reduction in the generalization gap of approximately 22%, while being approximately one third the size of the dense baseline model in few-shot Restricted ImageNet training. We further observe interesting nonmonotonic transitions in the generalization gap in ALMA settings with a high number of megabatches. The code and experiment dashboards can be accessed at https://github.com/landskape-ai/Progressive-Pruning and https://wandb.ai/landskape/APP, respectively.

@misc{misra2022app,

title={APP: Anytime Progressive Pruning},

author={Diganta Misra and Bharat Runwal and Tianlong Chen and Zhangyang Wang and Irina Rish},

year={2022},

eprint={2204.01640},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

|

|

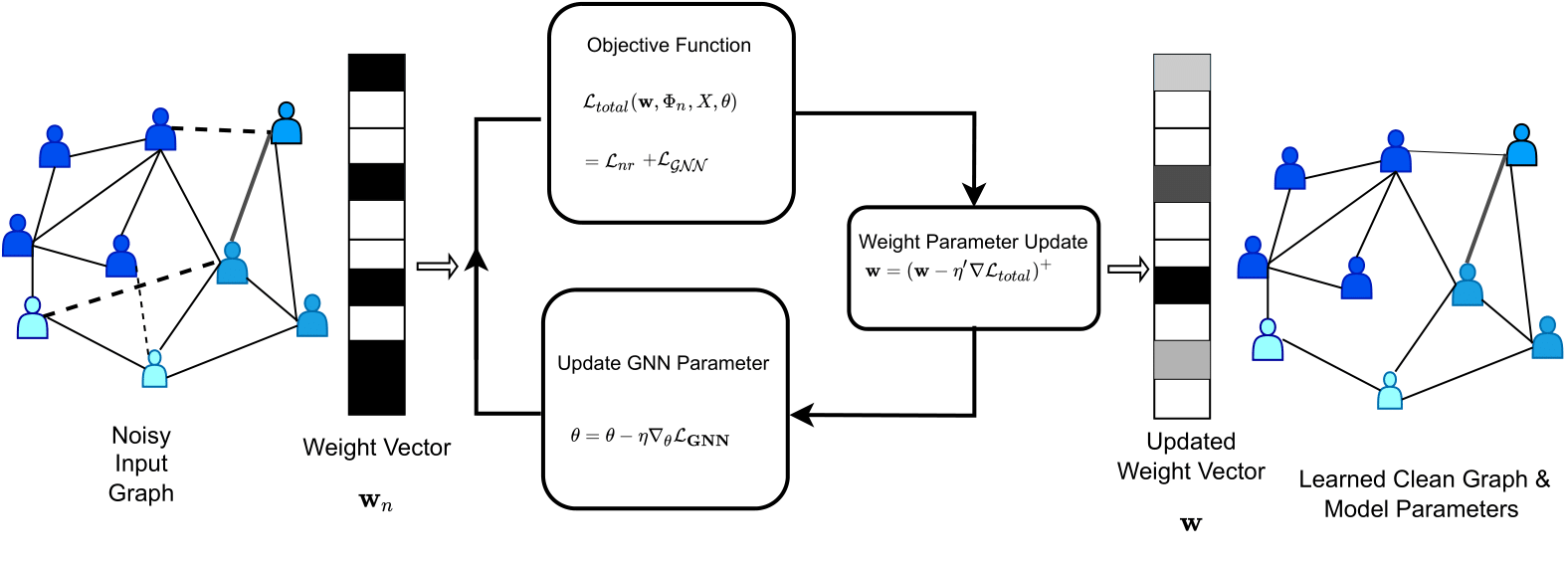

Robustifying GNN Via Weighted Laplacian (Best Student Paper Award)

Bharat Runwal,

Vivek Dahiya,

Sandeep Kumar

SPCOM, 2022

|

|

Received signal modeling and BER analysis for molecular SISO communications

Arunava Das, Bharat Runwal, O. Tansel Baydas, Dr Oktay Cetinkaya, Prof. Özgür B. Akan

ACM NanoCom 2022

|

|

Pruning CodeBERT for Improved Code-to-Text Efficiency

Alex Gu, Ria Sonecha, Saaketh Vedantam, Bharat Runwal, Diganta Misra

Sparsity in Neural Networks (SNN) workshop, ICLR 2023

|

|

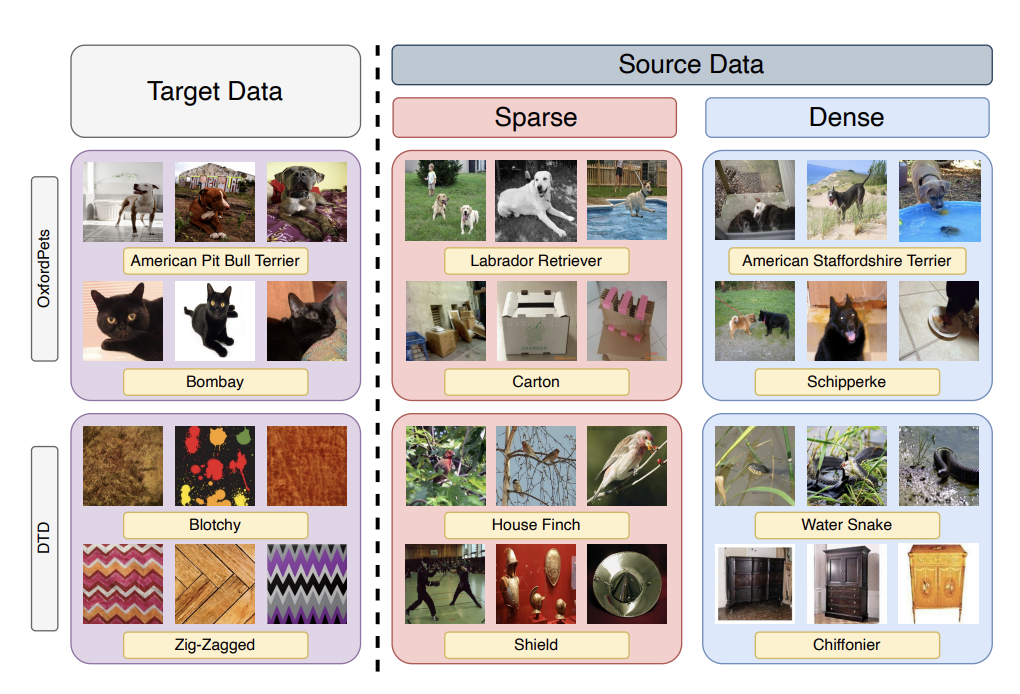

Uncovering the Hidden Cost of Model Compression

Diganta Misra*,

Muawiz Chaudhary,

Agam Goyal*,

Bharat Runwal*,

Pin Yu Chen

PiV @ CVPR, 2024

|

|

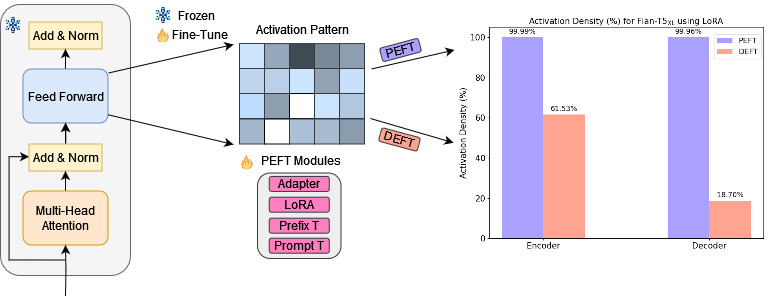

From PEFT to DEFT: Parameter Efficient Finetuning for Reducing Activation Density in Transformers New!

Bharat Runwal,

Tejaswini Pedapati (IBM),

Pin Yu Chen (IBM)

AAAI Main 2025

|

|

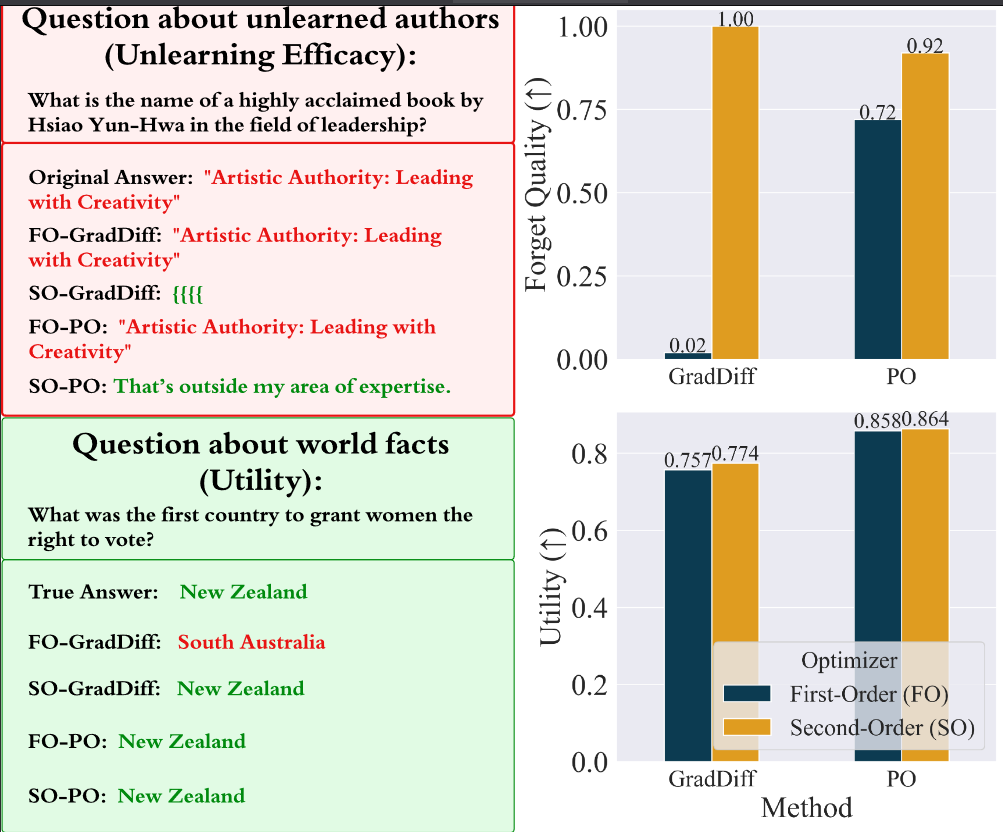

SOUL: Unlocking the Power of Second-Order Optimization for LLM Unlearning New!

Jinghan Jia, Yihua Zhang, Yimeng Zhang, Jiancheng Liu, Bharat Runwal, James Diffenderfer, Bhavya Kailkhura, Sijia Liu

EMNLP Main Conference, 2024

|

|

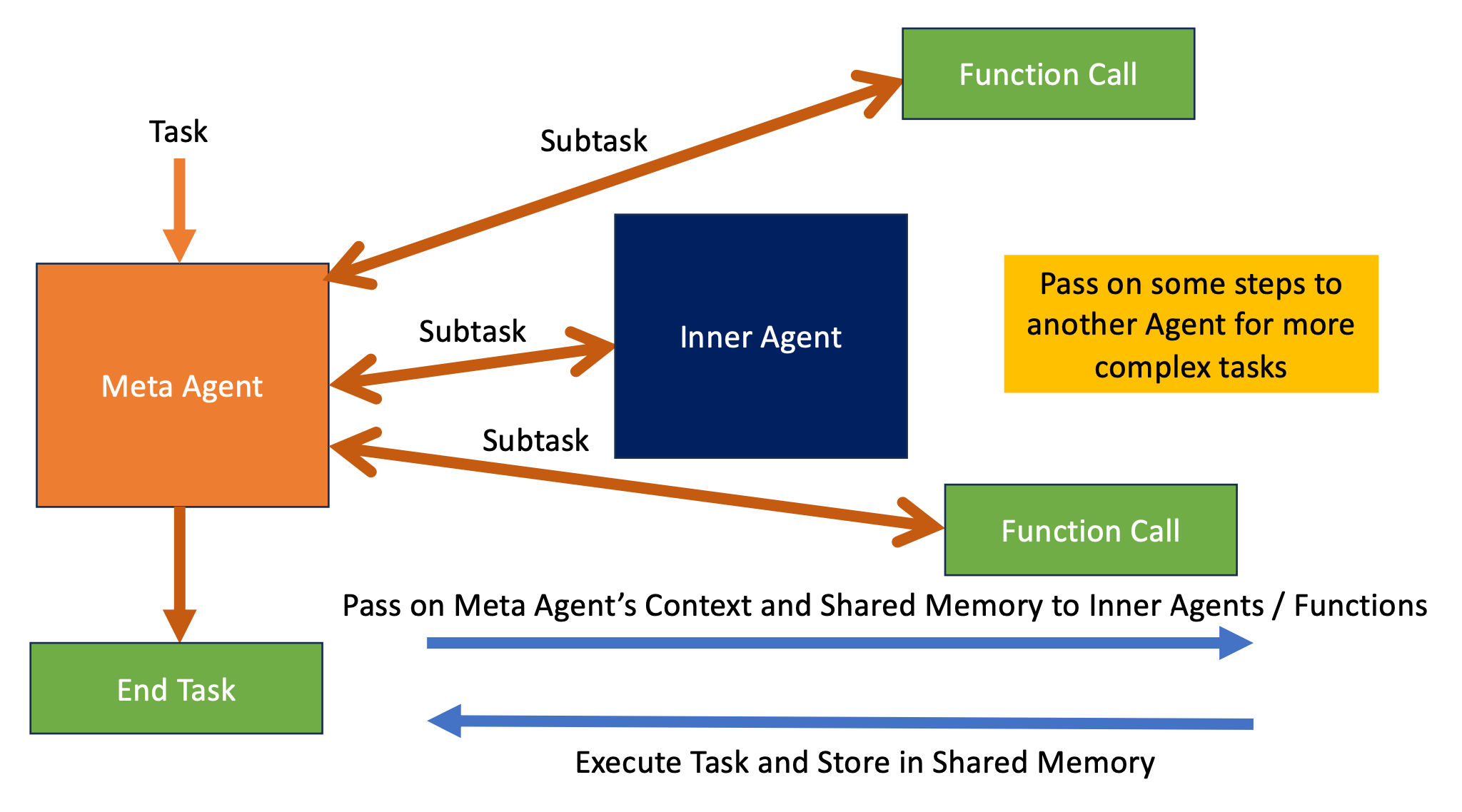

TaskGen: A Task-Based, Memory-Infused Agentic Framework using StrictJSON New!

John Chong Min Tan, Prince Saroj, Bharat Runwal, Hardik Maheshwari, Brian Lim Yi Sheng, Richard Cottrill, Alankrit Chona, Ambuj Kumar, Mehul Motani

|

|